Flannel CNI (Container Network Interface)

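- Flannel runs a small, single binary agent called flanneld on each host, and is responsible for allocating a subnet lease to each host out of a larger, preconfigured address space. In other words, flanneld runs on every node.

- Supported networking environments (backends): VXLAN, host-gw, and UDP; the others are still experimental.

  - VXLAN (recommended): uses UDP port 8472 and supports DirectRouting (toward nodes on the same subnet it behaves like host-gw). Note that this is not the "L2 extension" that network engineers know: each node has its own separate subnet, and traffic between those subnets is routed without NAT.

  - host-gw: installs a route to each node's pod subnet with that node's IP as the gateway, so the hosts need direct L2 connectivity to each other; for that reason it generally does not work in public cloud environments.

  - UDP (not recommended): for old kernels that do not support VXLAN; uses UDP port 8285.

- Each node gets a flannel.1 interface, which acts as the VXLAN VTEP.

- Each node gets a cni0 interface, which acts as a bridge.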
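To make the "subnet lease" idea concrete, here is a minimal Python sketch that carves the cluster network into one /24 per node. It assumes sequential allocation from the 172.16.0.0/16 network used in this lab and the node names k8s-m, k8s-w1 and k8s-w2; allocate_leases is a hypothetical helper, not flannel code. In this particular cluster flanneld runs with --kube-subnet-mgr, so it takes each node's podCIDR from Kubernetes rather than choosing leases itself, and the real order may differ.

```python
import ipaddress

def allocate_leases(network: str, nodes: list, prefixlen: int = 24) -> dict:
    """Give each node the next free /prefixlen block of the cluster network (hypothetical helper)."""
    blocks = ipaddress.ip_network(network).subnets(new_prefix=prefixlen)
    return {node: next(blocks) for node in nodes}

leases = allocate_leases("172.16.0.0/16", ["k8s-m", "k8s-w1", "k8s-w2"])
for node, subnet in leases.items():
    print(node, subnet)

# How many per-node /24 leases fit into the /16 cluster network
capacity = sum(1 for _ in ipaddress.ip_network("172.16.0.0/16").subnets(new_prefix=24))
print("capacity:", capacity)
```

With a /16 split into /24 blocks, the cluster network has room for 256 node leases.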

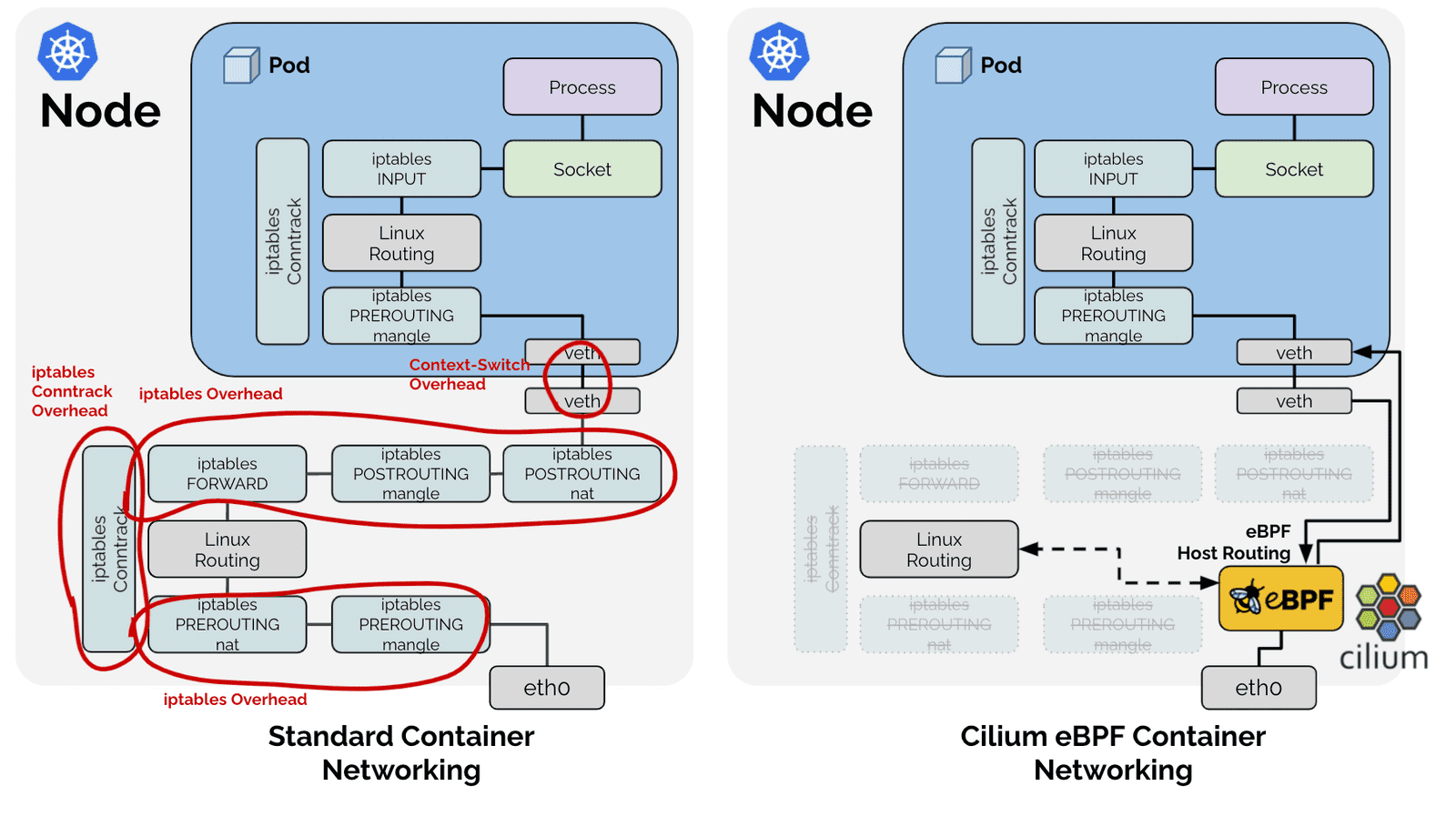

Diagrams explaining Flannel CNI and how it works

![](/assets/img/post_img/kang_2week_12.png)

![](/assets/img/post_img/kang_2week_13.png)

![](/assets/img/post_img/kang_2week_14.png)

https://cilium.io/blog/2021/05/11/cni-benchmark

![](/assets/img/post_img/kang_2week_16.png)

https://docs.openshift.com/container-platform/3.4/architecture/additional_concepts/flannel.html

Checking basic Flannel information

# Check the ConfigMap and DaemonSet

$ kubectl describe cm -n kube-system kube-flannel-cfg

$ kubectl describe ds -n kube-system kube-flannel-ds

# Check flannel info: address range, MTU

$ cat /run/flannel/subnet.env

FLANNEL_NETWORK=172.16.0.0/16

FLANNEL_SUBNET=172.16.0.1/24

FLANNEL_MTU=1450

FLANNEL_IPMASQ=true
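The values in subnet.env are related: FLANNEL_SUBNET is this node's pod range written as the cni0 gateway address, and FLANNEL_MTU is the underlay MTU minus the VXLAN encapsulation overhead. A small sketch that parses the file contents shown above and checks both; the 1500-byte MTU on the underlay interface (enp0s8) is an assumption, and parse_env is a hypothetical helper, not part of flannel.

```python
import ipaddress

# Contents of /run/flannel/subnet.env on k8s-m, copied from the output above
SUBNET_ENV = """\
FLANNEL_NETWORK=172.16.0.0/16
FLANNEL_SUBNET=172.16.0.1/24
FLANNEL_MTU=1450
FLANNEL_IPMASQ=true
"""

def parse_env(text: str) -> dict:
    """Parse simple KEY=VALUE lines, skipping blank lines and comments."""
    env = {}
    for line in text.splitlines():
        line = line.strip()
        if line and not line.startswith("#"):
            key, _, value = line.partition("=")
            env[key] = value
    return env

env = parse_env(SUBNET_ENV)
iface = ipaddress.ip_interface(env["FLANNEL_SUBNET"])
node_subnet = iface.network   # this node's pod range (its podCIDR)
gateway = iface.ip            # the address assigned to cni0
assert node_subnet.subnet_of(ipaddress.ip_network(env["FLANNEL_NETWORK"]))

# IPv4 VXLAN overhead: outer IPv4 (20) + UDP (8) + VXLAN (8) + inner Ethernet (14)
VXLAN_OVERHEAD = 20 + 8 + 8 + 14
underlay_mtu = 1500  # assumption: standard Ethernet MTU on enp0s8
print(node_subnet, gateway, underlay_mtu - VXLAN_OVERHEAD, env["FLANNEL_MTU"])
```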

# Check the dedicated subnet (podCIDR) assigned to each node

$ kubectl get nodes -o jsonpath='{.items[*].spec.podCIDR}' ;echo

172.16.0.0/24 172.16.1.0/24 172.16.2.0/24

$ kubectl get node k8s-m -o json | jq '.spec.podCIDR'

"172.16.0.0/24"

$ kubectl get node k8s-w1 -o json | jq '.spec.podCIDR'

"172.16.1.0/24"

$ kubectl get node k8s-w2 -o json | jq '.spec.podCIDR'

"172.16.2.0/24"

# Check basic network info

$ ip -c -br addr

$ ip -c link | grep -E 'flannel|cni|veth' -A1

$ ip -c addr

$ ip -c -d addr show cni0

$ ip -c -d addr show flannel.1

# Check routing info

$ ip -c route | grep 172.16.

172.16.0.0/24 dev cni0 proto kernel scope link src 172.16.0.1

172.16.1.0/24 via 172.16.1.0 dev flannel.1 onlink

172.16.2.0/24 via 172.16.2.0 dev flannel.1 onlink

$ route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

0.0.0.0 10.0.2.2 0.0.0.0 UG 100 0 0 enp0s3

10.0.2.0 0.0.0.0 255.255.255.0 U 0 0 0 enp0s3

10.0.2.2 0.0.0.0 255.255.255.255 UH 100 0 0 enp0s3

172.16.0.0 0.0.0.0 255.255.255.0 U 0 0 0 cni0

172.16.1.0 172.16.1.0 255.255.255.0 UG 0 0 0 flannel.1

172.16.2.0 172.16.2.0 255.255.255.0 UG 0 0 0 flannel.1

172.17.0.0 0.0.0.0 255.255.0.0 U 0 0 0 docker0

192.168.100.0 0.0.0.0 255.255.255.0 U 0 0 0 enp0s8
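Reading this table: the kernel picks the most specific (longest-prefix) route that contains the destination. The sketch below replays that choice over the routes printed above, which shows why traffic to pod-2 (172.16.2.4) leaves through flannel.1 with next hop 172.16.2.0 while pods on this node are reached directly through cni0. It is deliberately simplified: metrics, route types and policy routing are ignored, and lookup is a hypothetical helper.

```python
import ipaddress

# (destination, gateway or None, interface), copied from the `route -n` output above
ROUTES = [
    ("0.0.0.0/0", "10.0.2.2", "enp0s3"),
    ("10.0.2.0/24", None, "enp0s3"),
    ("10.0.2.2/32", None, "enp0s3"),
    ("172.16.0.0/24", None, "cni0"),
    ("172.16.1.0/24", "172.16.1.0", "flannel.1"),
    ("172.16.2.0/24", "172.16.2.0", "flannel.1"),
    ("172.17.0.0/16", None, "docker0"),
    ("192.168.100.0/24", None, "enp0s8"),
]

def lookup(dst: str) -> tuple:
    """Return (gateway, interface) of the longest-prefix route containing dst."""
    addr = ipaddress.ip_address(dst)
    candidates = [(ipaddress.ip_network(net), gw, dev) for net, gw, dev in ROUTES
                  if addr in ipaddress.ip_network(net)]
    _, gw, dev = max(candidates, key=lambda route: route[0].prefixlen)
    return gw, dev

print(lookup("172.16.2.4"))  # pod-2 on k8s-w2
print(lookup("172.16.0.6"))  # a pod on this node
print(lookup("8.8.8.8"))     # anything else uses the default route
```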

# Check connectivity to the next-hop IPs used to reach the other nodes' pod ranges (VTEP IP == flannel.1)

$ ping -c 1 172.16.1.0

PING 172.16.1.0 (172.16.1.0) 56(84) bytes of data.

64 bytes from 172.16.1.0: icmp_seq=1 ttl=64 time=20.4 ms

--- 172.16.1.0 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 20.376/20.376/20.376/0.000 ms

$ ping -c 1 172.16.2.0

PING 172.16.2.0 (172.16.2.0) 56(84) bytes of data.

64 bytes from 172.16.2.0: icmp_seq=1 ttl=64 time=13.4 ms

--- 172.16.2.0 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 13.352/13.352/13.352/0.000 ms

# Check the ARP table, MAC addresses, bridge info, etc.

## The MAC addresses below belong to the flannel.1 interfaces of the other nodes

$ ip -c neigh show dev flannel.1

172.16.2.0 lladdr 86:8f:47:89:1b:da PERMANENT

172.16.1.0 lladdr 1e:af:73:b0:c0:c3 PERMANENT

$ bridge fdb show dev flannel.1

76:4d:5d:82:42:b7 dst 192.168.100.102 self permanent

1e:af:73:b0:c0:c3 dst 192.168.100.101 self permanent

86:8f:47:89:1b:da dst 192.168.100.102 self permanent

16:43:ee:68:3c:08 dst 192.168.100.101 self permanent

$ ip -c -d addr show flannel.1

5: flannel.1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UNKNOWN group default

link/ether 42:44:84:8c:89:dd brd ff:ff:ff:ff:ff:ff promiscuity 0 minmtu 68 maxmtu 65535

vxlan id 1 local 192.168.100.10 dev enp0s8 srcport 0 0 dstport 8472 nolearning ttl auto ageing 300 udpcsum noudp6zerocsumtx noudp6zerocsumrx numtxqueues 1 numrxqueues 1 gso_max_size 65536 gso_max_segs 65535

inet 172.16.0.0/32 brd 172.16.0.0 scope global flannel.1

valid_lft forever preferred_lft forever

inet6 fe80::4044:84ff:fe8c:89dd/64 scope link

valid_lft forever preferred_lft forever
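flannel.1 is a Linux VXLAN device with VNI 1 (vxlan id 1) that sends to UDP port 8472 (dstport 8472). For reference, a sketch of the 8-byte VXLAN header from RFC 7348 that the kernel places between the outer UDP header and the inner Ethernet frame; vxlan_header is a hypothetical helper for illustration only, since the kernel builds this header itself.

```python
import struct

def vxlan_header(vni: int) -> bytes:
    """RFC 7348 VXLAN header: 8-bit flags (0x08 = "I" bit, VNI valid), 24 reserved bits,
    24-bit VNI, 8 reserved bits; 8 bytes in network byte order."""
    if not 0 <= vni < 2 ** 24:
        raise ValueError("VNI must fit in 24 bits")
    # "!B3xI": flags byte, 3 zero padding bytes, then a 32-bit word whose top 24 bits are the VNI
    return struct.pack("!B3xI", 0x08, vni << 8)

hdr = vxlan_header(1)  # flannel.1 above reports "vxlan id 1"
print(hdr.hex())
```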

$ kubectl describe node | grep VtepMAC

Annotations: flannel.alpha.coreos.com/backend-data: {"VNI":1,"VtepMAC":"42:44:84:8c:89:dd"}

Annotations: flannel.alpha.coreos.com/backend-data: {"VNI":1,"VtepMAC":"1e:af:73:b0:c0:c3"}

Annotations: flannel.alpha.coreos.com/backend-data: {"VNI":1,"VtepMAC":"86:8f:47:89:1b:da"}
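Putting these outputs together: after routing chooses flannel.1 with next hop 172.16.2.0, the kernel looks up the inner destination MAC in the neighbour table and then the outer destination IP in the forwarding database. Because flannel.1 is created with nolearning, the lookup relies on the PERMANENT entries that flanneld writes. A sketch of that two-step resolution using the entries copied from above; resolve_underlay is a hypothetical name.

```python
# Copied from `ip neigh show dev flannel.1` on k8s-m: next-hop VTEP IP -> VTEP MAC
NEIGH = {
    "172.16.2.0": "86:8f:47:89:1b:da",
    "172.16.1.0": "1e:af:73:b0:c0:c3",
}
# Copied from `bridge fdb show dev flannel.1` on k8s-m: VTEP MAC -> underlay node IP
FDB = {
    "76:4d:5d:82:42:b7": "192.168.100.102",
    "1e:af:73:b0:c0:c3": "192.168.100.101",
    "86:8f:47:89:1b:da": "192.168.100.102",
    "16:43:ee:68:3c:08": "192.168.100.101",
}

def resolve_underlay(next_hop: str, port: int = 8472) -> tuple:
    """Map a flannel.1 next hop to (inner dst MAC, outer dst IP, outer UDP dst port)."""
    mac = NEIGH[next_hop]      # step 1: neighbour (ARP) entry gives the remote VTEP MAC
    return mac, FDB[mac], port  # step 2: FDB entry gives the remote node's underlay IP

print(resolve_underlay("172.16.2.0"))
```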

$ bridge vlan show

port vlan ids

docker0 1 PVID Egress Untagged

cni0 1 PVID Egress Untagged

vethe914fc0b 1 PVID Egress Untagged

veth09e7e37a 1 PVID Egress Untagged

$ tree /var/lib/cni/networks/cbr0

/var/lib/cni/networks/cbr0

├── 172.16.0.6

├── 172.16.0.7

├── last_reserved_ip.0

└── lock

0 directories, 4 files

# Check iptables rules

$ iptables -t filter -S | grep 172.16.0.0

-A FORWARD -s 172.16.0.0/16 -j ACCEPT

-A FORWARD -d 172.16.0.0/16 -j ACCEPT

$ iptables -t nat -S | grep 'A POSTROUTING'

-A POSTROUTING -m comment --comment "kubernetes postrouting rules" -j KUBE-POSTROUTING

-A POSTROUTING -s 172.17.0.0/16 ! -o docker0 -j MASQUERADE

-A POSTROUTING -s 172.16.0.0/16 -d 172.16.0.0/16 -j RETURN

-A POSTROUTING -s 172.16.0.0/16 ! -d 224.0.0.0/4 -j MASQUERADE --random-fully

-A POSTROUTING ! -s 172.16.0.0/16 -d 172.16.0.0/24 -j RETURN

-A POSTROUTING ! -s 172.16.0.0/16 -d 172.16.0.0/16 -j MASQUERADE --random-fully
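The last four rules above are flannel's, and they decide when traffic is NATed. The sketch evaluates only those four rules, in order (it ignores KUBE-POSTROUTING and the docker0 rule), to show why pod-to-pod traffic between nodes keeps its source IP while pod-to-Internet traffic is masqueraded. flannel_postrouting is a hypothetical helper, and the local podCIDR 172.16.0.0/24 is k8s-m's.

```python
import ipaddress
from typing import Optional

CLUSTER = ipaddress.ip_network("172.16.0.0/16")     # FLANNEL_NETWORK
LOCAL_PODS = ipaddress.ip_network("172.16.0.0/24")  # this node's (k8s-m) podCIDR
MULTICAST = ipaddress.ip_network("224.0.0.0/4")

def flannel_postrouting(src: str, dst: str) -> Optional[str]:
    """Return the target of the first matching flannel POSTROUTING rule, or None."""
    s, d = ipaddress.ip_address(src), ipaddress.ip_address(dst)
    if s in CLUSTER and d in CLUSTER:
        return "RETURN"      # pod to pod: no NAT
    if s in CLUSTER and d not in MULTICAST:
        return "MASQUERADE"  # pod to outside the cluster network (multicast excluded)
    if s not in CLUSTER and d in LOCAL_PODS:
        return "RETURN"      # non-pod source to this node's own pods: keep source IP
    if s not in CLUSTER and d in CLUSTER:
        return "MASQUERADE"  # non-pod source to pods on other nodes
    return None              # no flannel rule matched

for src, dst in [("172.16.0.6", "172.16.2.4"), ("172.16.0.6", "8.8.8.8"),
                 ("192.168.100.10", "172.16.0.7"), ("192.168.100.10", "172.16.1.3")]:
    print(src, "->", dst, flannel_postrouting(src, dst))
```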

# Check the flannel-related annotations on each node

$ kubectl describe node | grep -A3 Annotations

Annotations: flannel.alpha.coreos.com/backend-data: {"VNI":1,"VtepMAC":"42:44:84:8c:89:dd"}

flannel.alpha.coreos.com/backend-type: vxlan

flannel.alpha.coreos.com/kube-subnet-manager: true

flannel.alpha.coreos.com/public-ip: 192.168.100.10

--

Annotations: flannel.alpha.coreos.com/backend-data: {"VNI":1,"VtepMAC":"1e:af:73:b0:c0:c3"}

flannel.alpha.coreos.com/backend-type: vxlan

flannel.alpha.coreos.com/kube-subnet-manager: true

flannel.alpha.coreos.com/public-ip: 192.168.100.101

--

Annotations: flannel.alpha.coreos.com/backend-data: {"VNI":1,"VtepMAC":"86:8f:47:89:1b:da"}

flannel.alpha.coreos.com/backend-type: vxlan

flannel.alpha.coreos.com/kube-subnet-manager: true

flannel.alpha.coreos.com/public-ip: 192.168.100.102
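These annotations are what flanneld works from: backend-data carries each node's VtepMAC, and public-ip is the underlay address of that node's VTEP. A simplified sketch that uses the values above plus the podCIDRs printed earlier to derive the neighbour and FDB entries k8s-m needs for its peers, then compares them with the fdb output shown earlier. expected_entries is hypothetical, and mapping each peer's VTEP IP to the .0 address of its podCIDR follows the flannel.1 address seen above (172.16.0.0/32).

```python
import json

# Values copied from the node annotations above and the podCIDR output earlier
NODES = {
    "k8s-m": {"podCIDR": "172.16.0.0/24", "public-ip": "192.168.100.10",
              "backend-data": '{"VNI":1,"VtepMAC":"42:44:84:8c:89:dd"}'},
    "k8s-w1": {"podCIDR": "172.16.1.0/24", "public-ip": "192.168.100.101",
               "backend-data": '{"VNI":1,"VtepMAC":"1e:af:73:b0:c0:c3"}'},
    "k8s-w2": {"podCIDR": "172.16.2.0/24", "public-ip": "192.168.100.102",
               "backend-data": '{"VNI":1,"VtepMAC":"86:8f:47:89:1b:da"}'},
}

def expected_entries(local: str):
    """Neighbour (VTEP IP -> MAC) and FDB (MAC -> node IP) entries needed on `local`."""
    neigh, fdb = {}, {}
    for name, node in NODES.items():
        if name == local:
            continue  # no tunnel entries toward the node itself
        mac = json.loads(node["backend-data"])["VtepMAC"]
        vtep_ip = node["podCIDR"].split("/")[0]  # the peer's flannel.1 holds the .0 address
        neigh[vtep_ip] = mac
        fdb[mac] = node["public-ip"]
    return neigh, fdb

neigh, fdb = expected_entries("k8s-m")

# MACs seen in `bridge fdb show dev flannel.1` on k8s-m
observed = {"76:4d:5d:82:42:b7", "1e:af:73:b0:c0:c3", "86:8f:47:89:1b:da", "16:43:ee:68:3c:08"}
stale = sorted(observed - set(fdb))
print(neigh)
print(fdb)
print("not explained by annotations:", stale)
```

The derived neighbour entries match the ip neigh output exactly, while two of the four FDB MACs (16:43:ee:68:3c:08 and 76:4d:5d:82:42:b7) do not match any VtepMAC the nodes currently advertise.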

# Get the flannel pod names

$ kubectl get pod -n kube-system -l app=flannel -o name

pod/kube-flannel-ds-8tmnm

pod/kube-flannel-ds-9v9k6

pod/kube-flannel-ds-vxzwm

# Check the process list inside a flannel pod

$ kubectl exec -it -n kube-system pod/kube-flannel-ds-8tmnm -- ps | head -2

Defaulted container "kube-flannel" out of: kube-flannel, install-cni-plugin (init), install-cni (init)

PID USER TIME COMMAND

1 root 5:46 /opt/bin/flanneld --ip-masq --kube-subnet-mgr --iface=enp0

# Tail the logs of the flannel pods

$ kubens kube-system && kubetail -l app=flannel --since 1h

Context "admin-k8s" modified.

Active namespace is "kube-system".

Will tail 9 logs...

kube-flannel-ds-8tmnm kube-flannel

kube-flannel-ds-8tmnm install-cni-plugin

kube-flannel-ds-8tmnm install-cni

kube-flannel-ds-9v9k6 kube-flannel

kube-flannel-ds-9v9k6 install-cni-plugin

kube-flannel-ds-9v9k6 install-cni

kube-flannel-ds-vxzwm kube-flannel

kube-flannel-ds-vxzwm install-cni-plugin

kube-flannel-ds-vxzwm install-cni

$ kubens -

Context "admin-k8s" modified.

Active namespace is "default".

# Check network namespaces

$ lsns -t net

NS TYPE NPROCS PID USER NETNSID NSFS COMMAND

4026531992 net 169 1 root unassigned /run/docker/netns/default /lib/systemd/systemd --system --deserialize 35

4026532237 net 2 6681 65535 0 /run/docker/netns/38f220b48dc5 /pause

4026532308 net 2 6730 65535 1 /run/docker/netns/646295f1959d /pause

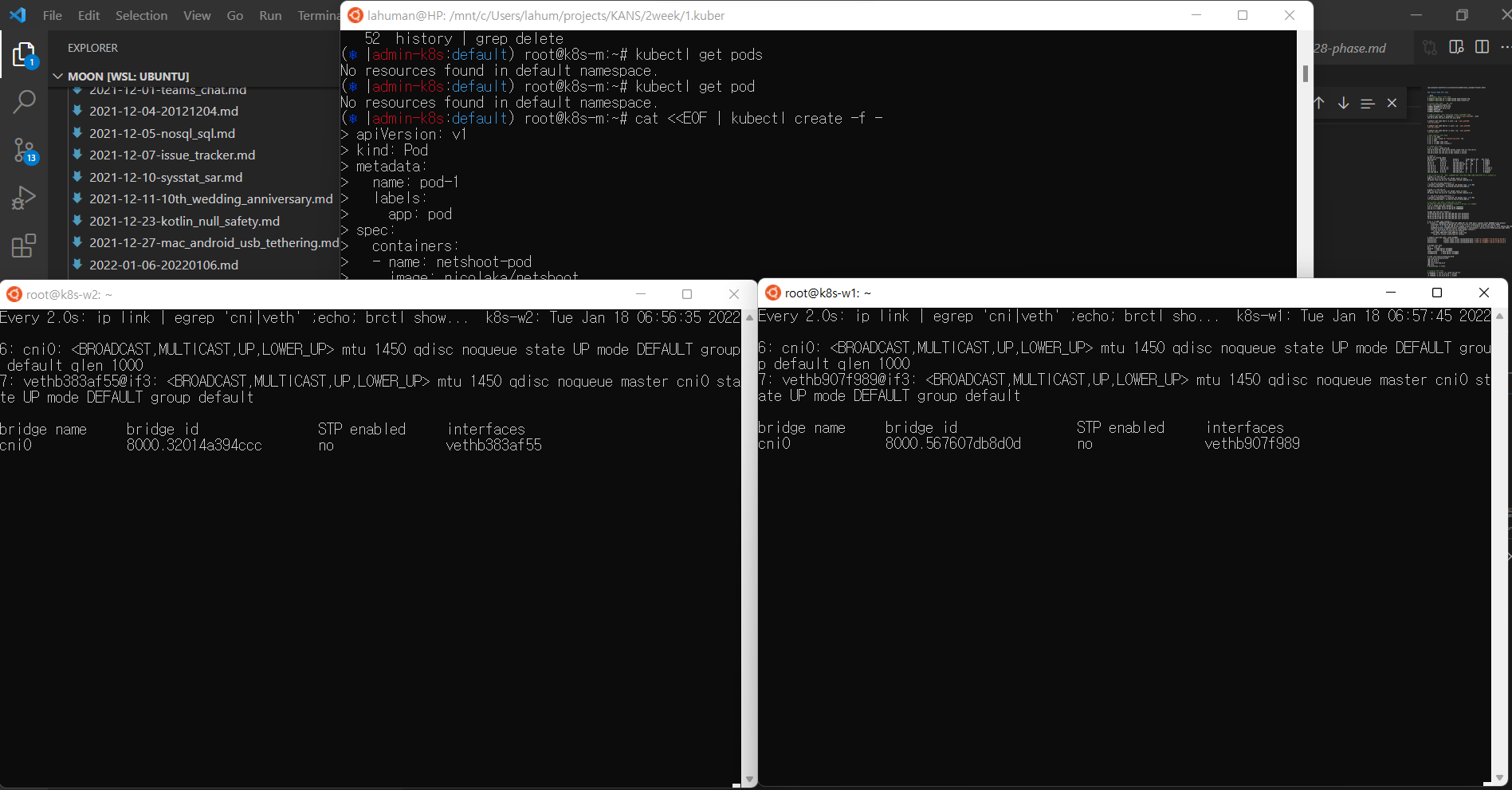

Creating two pods

# Worker nodes 1 and 2: monitoring

$ watch -d "ip link | egrep 'cni|veth' ;echo; brctl show cni0"

# Create resources on the fly by combining cat with a here document

# Master node: create two pods

$ cat <<EOF | kubectl create -f -
apiVersion: v1
kind: Pod
metadata:
  name: pod-1
  labels:
    app: pod
spec:
  containers:
  - name: netshoot-pod
    image: nicolaka/netshoot
    command: ["tail"]
    args: ["-f", "/dev/null"]
  terminationGracePeriodSeconds: 0
---
apiVersion: v1
kind: Pod
metadata:
  name: pod-2
  labels:
    app: pod
spec:
  containers:
  - name: netshoot-pod
    image: nicolaka/netshoot
    command: ["tail"]
    args: ["-f", "/dev/null"]
  terminationGracePeriodSeconds: 0
EOF

# Check the pods

$ kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod-1 1/1 Running 0 91s 172.16.1.3 k8s-w1 <none> <none>

pod-2 1/1 Running 0 91s 172.16.2.4 k8s-w2 <none> <none>

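If you run watch -d "ip link | egrep 'cni|veth' ;echo; brctl show cni0" before creating the pods, it reports that cni0 cannot be found, because the cni0 bridge is only created when the first pod is set up on that node. Don't panic: go ahead and create the pods, and you will get a result like the image below.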

- Use the following if the pods all end up on a single node: spec.nodeName pins pod-3 to k8s-w1 and pod-4 to k8s-w2.

$ cat <<EOF | kubectl create -f -
apiVersion: v1
kind: Pod
metadata:
  name: pod-3
  labels:
    app: pod
spec:
  nodeName: k8s-w1
  containers:
  - name: netshoot-pod
    image: nicolaka/netshoot
    command: ["tail"]
    args: ["-f", "/dev/null"]
  terminationGracePeriodSeconds: 0
---
apiVersion: v1
kind: Pod
metadata:
  name: pod-4
  labels:
    app: pod
spec:
  nodeName: k8s-w2
  containers:
  - name: netshoot-pod
    image: nicolaka/netshoot
    command: ["tail"]
    args: ["-f", "/dev/null"]
  terminationGracePeriodSeconds: 0
EOF
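If you would rather generate these manifests than hand-edit heredocs, the same pods can be built as JSON, which kubectl create -f also accepts. A sketch under that assumption; netshoot_pod is a hypothetical helper, and wrapping the pods in a kind: List object is one way to send several resources in a single document.

```python
import json
from typing import Optional

def netshoot_pod(name: str, node_name: Optional[str] = None) -> dict:
    """Build the netshoot test Pod used above; pin it to a node when node_name is given."""
    spec = {
        "containers": [{
            "name": "netshoot-pod",
            "image": "nicolaka/netshoot",
            "command": ["tail"],
            "args": ["-f", "/dev/null"],
        }],
        "terminationGracePeriodSeconds": 0,
    }
    if node_name:
        spec["nodeName"] = node_name  # assigns the pod directly, skipping the scheduler
    return {"apiVersion": "v1", "kind": "Pod",
            "metadata": {"name": name, "labels": {"app": "pod"}}, "spec": spec}

pods = {"apiVersion": "v1", "kind": "List",
        "items": [netshoot_pod("pod-3", "k8s-w1"), netshoot_pod("pod-4", "k8s-w2")]}
print(json.dumps(pods, indent=2))
```

Saved as a script (the name gen_pods.py is hypothetical), it could be used as python3 gen_pods.py | kubectl create -f -.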

Checking information after creating the pods

# Worker node 1

$ cat /run/flannel/subnet.env

FLANNEL_NETWORK=172.16.0.0/16

FLANNEL_SUBNET=172.16.1.1/24

FLANNEL_MTU=1450

FLANNEL_IPMASQ=true

# Check bridge info

$ brctl show cni0

bridge name bridge id STP enabled interfaces

cni0 8000.8e53f02dd93a no veth09e7e37a

vethe914fc0b

# Check the links (veth) attached to the bridge

$ bridge link

7: vethe914fc0b@enp0s8: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 master cni0 state forwarding priority 32 cost 2

8: veth09e7e37a@enp0s8: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 master cni0 state forwarding priority 32 cost 2

# Check bridge VLAN info

$ bridge vlan

port vlan ids

docker0 1 PVID Egress Untagged

cni0 1 PVID Egress Untagged

vethe914fc0b 1 PVID Egress Untagged

veth09e7e37a 1 PVID Egress Untagged

# **cbr** (**c**ustom **br**idge) info: the bridge used by the kubenet CNI - [link](https://kubernetes.io/ko/docs/concepts/extend-kubernetes/compute-storage-net/network-plugins/#kubenet)

$ tree /var/lib/cni/networks/cbr0

/var/lib/cni/networks/cbr0

├── 172.16.0.6

├── 172.16.0.7

├── last_reserved_ip.0

└── lock
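The files under /var/lib/cni/networks/cbr0 are the state of the host-local IPAM plugin (the directory is named after the network name, cbr0, in flannel's CNI config): one file per allocated pod IP, plus last_reserved_ip.0 recording the most recently handed out address. A rough model of how the next address is chosen: start after the last reserved IP, wrap around, and skip the gateway and addresses already in use. next_ip is hypothetical, the content of last_reserved_ip.0 is assumed to be 172.16.0.7, and the real plugin also honours range settings this sketch ignores.

```python
import ipaddress
from typing import Optional

def next_ip(subnet: str, gateway: str, reserved: set, last_reserved: Optional[str]) -> str:
    """Round-robin pick of the next free address, roughly like CNI host-local IPAM."""
    hosts = list(ipaddress.ip_network(subnet).hosts())  # excludes network and broadcast
    taken = {ipaddress.ip_address(ip) for ip in reserved} | {ipaddress.ip_address(gateway)}
    start = 0
    if last_reserved is not None:
        start = hosts.index(ipaddress.ip_address(last_reserved)) + 1
    for offset in range(len(hosts)):
        candidate = hosts[(start + offset) % len(hosts)]  # wrap around past the end
        if candidate not in taken:
            return str(candidate)
    raise RuntimeError("no free addresses left in " + subnet)

# State from /var/lib/cni/networks/cbr0 above; last_reserved_ip.0 assumed to hold 172.16.0.7
print(next_ip("172.16.0.0/24", "172.16.0.1", {"172.16.0.6", "172.16.0.7"}, "172.16.0.7"))
```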

# Check network-related info

$ ip -c addr | grep veth -A3

7: vethe914fc0b@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master cni0 state UP group default

link/ether 0a:44:6f:7b:aa:07 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet6 fe80::844:6fff:fe7b:aa07/64 scope link

valid_lft forever preferred_lft forever

8: veth09e7e37a@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master cni0 state UP group default

link/ether a6:44:a7:30:50:97 brd ff:ff:ff:ff:ff:ff link-netnsid 1

inet6 fe80::a444:a7ff:fe30:5097/64 scope link

valid_lft forever preferred_lft forever

$ ip -c route

default via 10.0.2.2 dev enp0s3 proto dhcp src 10.0.2.15 metric 100

10.0.2.0/24 dev enp0s3 proto kernel scope link src 10.0.2.15

10.0.2.2 dev enp0s3 proto dhcp scope link src 10.0.2.15 metric 100

172.16.0.0/24 dev cni0 proto kernel scope link src 172.16.0.1

172.16.1.0/24 via 172.16.1.0 dev flannel.1 onlink

172.16.2.0/24 via 172.16.2.0 dev flannel.1 onlink

172.17.0.0/16 dev docker0 proto kernel scope link src 172.17.0.1 linkdown

192.168.100.0/24 dev enp0s8 proto kernel scope link src 192.168.100.10

# The MAC addresses below belong to the flannel.1 interfaces of the other nodes

$ ip -c neigh show dev flannel.1

172.16.2.0 lladdr 86:8f:47:89:1b:da PERMANENT

172.16.1.0 lladdr 1e:af:73:b0:c0:c3 PERMANENT

$ bridge fdb show dev flannel.1

76:4d:5d:82:42:b7 dst 192.168.100.102 self permanent

1e:af:73:b0:c0:c3 dst 192.168.100.101 self permanent

86:8f:47:89:1b:da dst 192.168.100.102 self permanent

16:43:ee:68:3c:08 dst 192.168.100.101 self permanent

$ ip -c -d addr show flannel.1

5: flannel.1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UNKNOWN group default

link/ether 42:44:84:8c:89:dd brd ff:ff:ff:ff:ff:ff promiscuity 0 minmtu 68 maxmtu 65535

vxlan id 1 local 192.168.100.10 dev enp0s8 srcport 0 0 dstport 8472 nolearning ttl auto ageing 300 udpcsum noudp6zerocsumtx noudp6zerocsumrx numtxqueues 1 numrxqueues 1 gso_max_size 65536 gso_max_segs 65535

inet 172.16.0.0/32 brd 172.16.0.0 scope global flannel.1

valid_lft forever preferred_lft forever

inet6 fe80::4044:84ff:fe8c:89dd/64 scope link

valid_lft forever preferred_lft forever

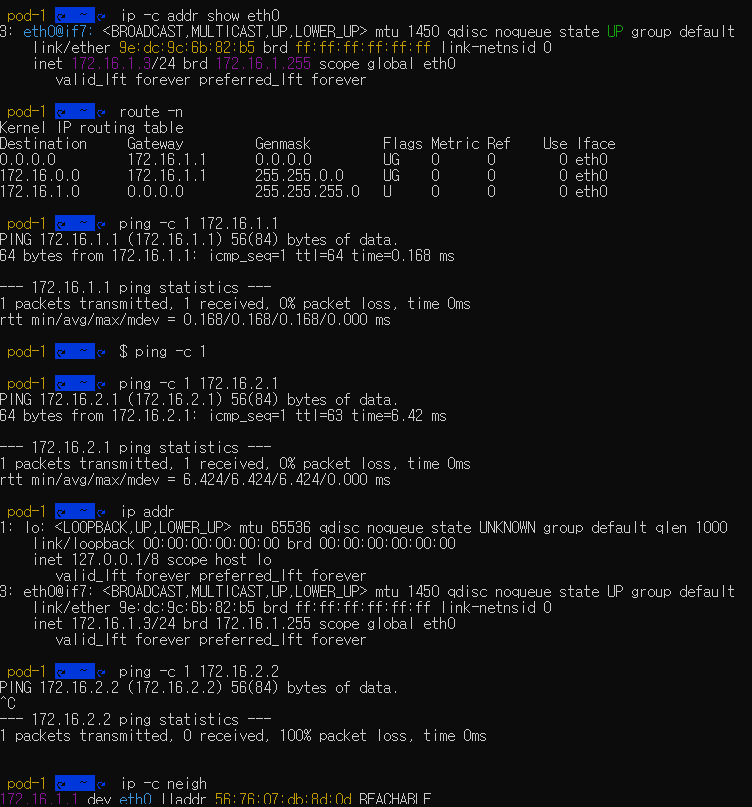

Checking from a shell inside the pod

$ kubectl exec -it pod-1 -- zsh

$ ip -c addr show eth0

3: eth0@if7: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UP group default

link/ether 9e:dc:9c:6b:82:b5 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 172.16.1.3/24 brd 172.16.1.255 scope global eth0

valid_lft forever preferred_lft forever

# Which interface owns the GW IP? (1) flannel.1 (2) cni0

$ route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

0.0.0.0 172.16.2.1 0.0.0.0 UG 0 0 0 eth0

172.16.0.0 172.16.2.1 255.255.0.0 UG 0 0 0 eth0

172.16.2.0 0.0.0.0 255.255.255.0 U 0 0 0 eth0

$ ping -c 1 <GW IP>

PING 172.16.1.1 (172.16.1.1) 56(84) bytes of data.

64 bytes from 172.16.1.1: icmp_seq=1 ttl=64 time=0.168 ms

--- 172.16.1.1 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 0.168/0.168/0.168/0.000 ms

$ ping -c 1 <pod-2 IP>

PING 172.16.2.1 (172.16.2.1) 56(84) bytes of data.

64 bytes from 172.16.2.1: icmp_seq=1 ttl=63 time=6.42 ms

--- 172.16.2.1 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 6.424/6.424/6.424/0.000 ms

$ ip -c neigh

172.16.1.1 dev eth0 lladdr 56:76:07:db:8d:0d REACHABLE
